Describe it. Build it. Ship it.

Business owners chat. Developers code. AI agents build.

Same project, real-time, one workspace.

Business owners describe features in chat. Developers review the code. AI builds it all in real time.

Built on open research from Blankline

Read our papers on agentic systems and recursive swarms

Real-Time Multiplayer Chat

Your whole team. One AI.

Every message shared.

Everyone chats with the same AI in real time. When Priya asks for a login page, Marcus already has that context. The AI remembers Day 1 decisions on Day 100.

Priya: Add a login page with Google sign-in and redirect to dashboard after login.

AI: Created login page with Google OAuth. Added redirect to /dashboard.

Marcus: Now add Stripe payment to the checkout — use the same auth session from the login.

AI: Integrated Stripe in checkout.ts. Reusing existing Google OAuth session as requested.

SHARED CONTEXT: Marcus didn't need to explain authentication. The AI already had the full workspace history, including Priya's request from 2 minutes earlier.

Trusted by engineering teams

The context engine.

Powering the next generation.

Dropstone powers software development for teams that demand precision, scale, and shared intelligence.

"We moved our entire core banking infrastructure to Dropstone. The D3 Engine's ability to maintain context across 400+ microservices without hallucinating is something we haven't seen in any other tool."

"Horizon Mode changed how we ship. I describe the feature in chat, and three autonomous agents coordinate to build the frontend, backend, and tests simultaneously. It feels like I cloned my best senior dev."

"The multiplayer aspect is widely underrated. Being able to have my product manager, myself, and an AI agent all in the same editor session fixed our 'lost in translation' issues overnight."

"Other tools forget what we discussed yesterday. Dropstone's Workspace Memory actually learns our architectural patterns. It stopped suggesting libraries we banned months ago."

Different

Context Engineering.

We didn't just wrap an API. We published our research on retrieval-augmented code generation.

Semantic Memory Indexing

A graph-based memory system that maps relationship density between files and retrieves context with 99.8% precision.

Dynamic Context Pruning

Real-time AST analysis prunes irrelevant code branches before inference, reducing token usage and hallucinations.

Beyond Retrieval-Augmented Generation: Solving the Temporal Event Horizon for Unlimited Context

Blankline Research Integrity Council

Role-Based Access

Everyone sees what they need.

Nothing more.

Just as Figma adapts to designers and developers, Dropstone adapts to business owners and engineers. Same workspace, different interfaces.

Business View

For founders & non-technical teams

Chat with AI in plain English. See a live preview of your app being built. Never touch code.

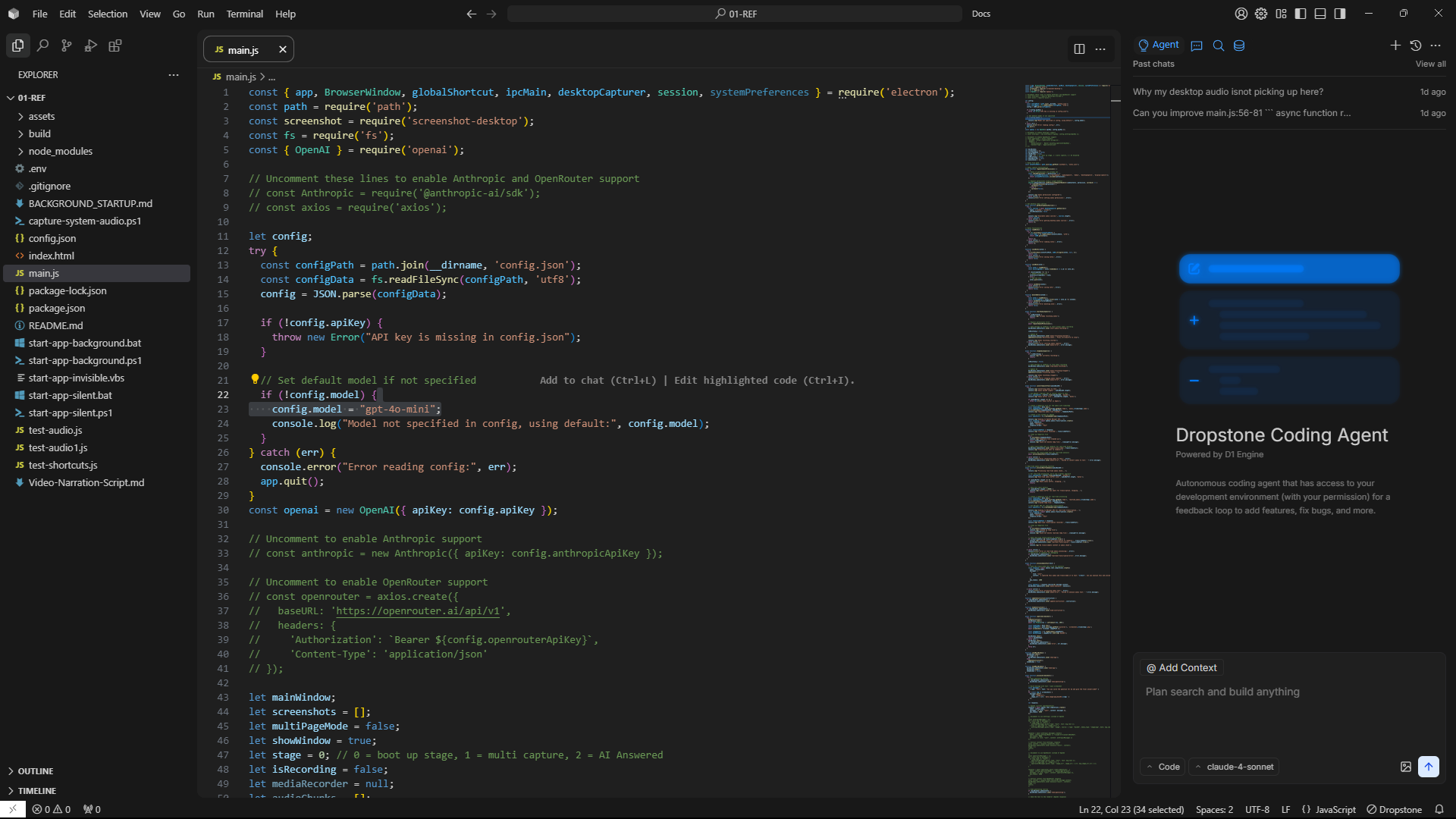

Developer View

For engineers who want full control

A full VS Code-compatible editor, terminal, and Git integration. Review AI-generated code before it ships.

AI Agent View

Transparent agent activity

See in real time what agents are working on, which files they modified, their test results, and security scans.

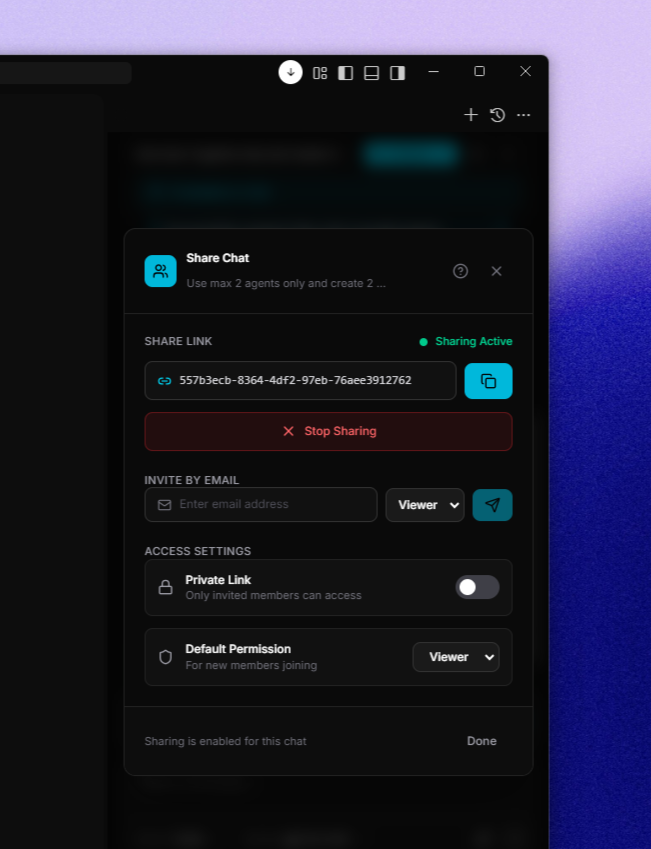

Share with one link.

Control who sees what.

Generate a shareable link to any workspace. Business owners get the chat view. Developers get the code view. Everyone stays in sync without stepping on each other's toes.

Think Figma. Designers and developers look at the same file but see different interfaces. Nobody questions whether Figma is “for designers or developers” — it's for both.

Background Agents

Your AI workforce

never sleeps.

While your team focuses on strategy, background agents explore multiple solutions in parallel — testing for bugs and security flaws before showing anyone the result.

- 25 parallel agents

- 1000s of solutions tested

- 100% pre-verified

How it works

Explore

Agents explore multiple solution paths simultaneously, testing different approaches to your request.

Validate

Each solution is tested for bugs, security flaws, and edge cases before anyone sees it.

Deliver

Only the best solution is presented — verified, reviewed, and ready for your team.

Pro includes 10 agents. The Teams plan includes 25.

Pricing

Simple, transparent pricing

From free local inference to full team compute. Pick what fits.

Free

Open-source experimentation and local model inference

Compute

- 50 fast requests per day

- Unlimited local models (Ollama)

Runtime

- Linear inference only

- Standard context window

Collaboration

- Unlimited active share links

- Viewer-only mode

- Real-time file collaboration

- Real-time editing previews

Pro

Deep reasoning and infinite context for professional engineers

Compute

- Unlimited local and fast models

- ~750 frontier requests (Claude 4.5, GPT 5.2)

Horizon

- Scout swarm exploration

- L1-L2 adversarial verification

- Impact analysis engine

Memory

- Advanced contextual understanding

- Personal learning weights

Collaboration

- Unlimited share links

- Viewer & Editor access modes

- 1 concurrent editor per session

- Real-time collaboration

- Live editing & preview

Teams

Shared state and autonomous verification for engineering teams

Compute

- ~2,250 frontier requests (3x Pro)

- Pooled team credits

Horizon

- Flash protocol instant pruning

- Shared failure vectors

- L3-L4 sandboxed verification

Collaboration

- Unlimited share links

- Viewer & Editor access modes

- Unlimited concurrent editors per session

- Real-time collaboration

- Live editing & preview

Governance

- SSO and SAML integration

- Advanced audit logging

Your entire team. One workspace. Ship 10x faster.

Founders describe. Developers build. AI accelerates. Join 5,000+ teams building software without boundaries. No credit card required.